Introduction

The development of new technologies for the automatic processing of human language is essential for the effective use of new multimodal and multimedia systems, whose ultimate goal is to let people communicate with machines using their natural communication skills. Advances in human language technology (HLT) offer the promise of nearly universal access to on-line information and services. Since almost everyone speaks and understands a language, spoken language systems will allow the average person to interact with computers without special skills or training, using common devices such as the telephone. The aim of this research is to collect and effectively reorganize all the studies carried out at IFD in the field of HLT (speech analysis, text-to-speech synthesis, speech recognition, facial animation, dialogue in new learning and teaching systems), with the goal of building a new integrated system for man-machine communication.

Research Focus

In recent years, advances in HLT, mainly in speech, text and image processing, have accelerated considerably. The man-machine systems built with this technology focus on recognizing the user's spoken words, interpreting their meaning, triggering an appropriate response, and finally checking that the response was effective.

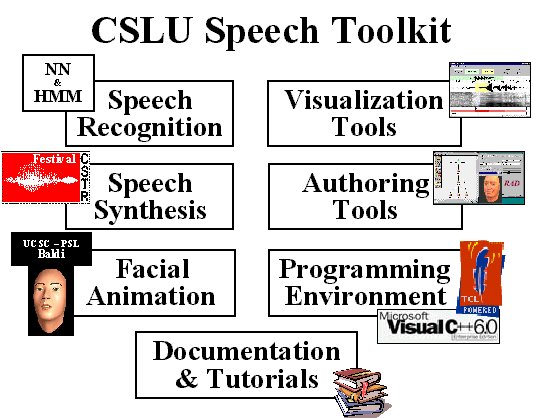

We are developing the Italian version of the "CSLU Speech Toolkit" [1] [2], illustrated in Figure 1, with the goal of building a system that allows people to interact with computers through speech in order to obtain information on virtually any topic, conduct business, and communicate with each other more effectively. To this end, we are cooperating with the "Center for Spoken Language Understanding" (CSLU) of the "Oregon Graduate Institute" (OGI) in Portland and with the "Center for Spoken Language Research" (CSLR) of the "University of Colorado" (CU) in Boulder. Our work has concentrated on an integrated system for Italian automatic speech recognition, text-to-speech synthesis and facial animation.

Figure 1. CSLU Speech Toolkit.

As for speech recognition, our efforts focus on the automatic recognition of connected-digit strings spoken over both microphone and telephone channels, and on general-purpose Italian recognition. In particular, we have designed a system with a hybrid architecture based on artificial Neural Networks (NN) and Hidden Markov Models (HMM). Several speech corpora have been used for this project: some were built by the "Istituto per la Ricerca Scientifica e Tecnologica" (IRST) in Trento and others by the "Centro Studi e Laboratori Telecomunicazioni" (CSELT) of TELECOM in Torino. We have a close cooperation agreement with both institutions.
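The text does not describe how the NN and HMM components are combined in this system. As a hedged illustration only, the sketch below shows one common way hybrid NN/HMM recognizers are built: the network estimates per-frame state posteriors P(q | x_t), these are divided by the state priors P(q) to obtain scaled likelihoods, and those scores serve as HMM emission probabilities in Viterbi decoding. The function names (`safe_log`, `scaled_log_likelihoods`, `viterbi`) and the toy 3-state model with its made-up posteriors are hypothetical and are not taken from the IFD system or from the CSLU Toolkit.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def safe_log(p: float) -> float:
    """Natural log that maps a zero probability to -inf instead of raising."""
    return math.log(p) if p > 0 else -math.inf


def scaled_log_likelihoods(posteriors: Sequence[Sequence[float]],
                           priors: Sequence[float]) -> Matrix:
    """Turn per-frame network outputs P(q | x_t) into HMM emission scores.

    By Bayes' rule, p(x_t | q) / p(x_t) = P(q | x_t) / P(q). The factor
    1 / p(x_t) depends only on the frame, not on the state, so it does not
    change which state sequence scores best.
    """
    return [[safe_log(p) - safe_log(prior) for p, prior in zip(frame, priors)]
            for frame in posteriors]


def viterbi(log_emis: Matrix, log_trans: Matrix,
            log_init: Sequence[float]) -> Tuple[List[int], float]:
    """Return the most likely state sequence and its log score."""
    n = len(log_init)
    # delta[s]: best log score of any partial path ending in state s.
    delta = [log_init[s] + log_emis[0][s] for s in range(n)]
    backptrs: List[List[int]] = []
    for t in range(1, len(log_emis)):
        new_delta, bp = [], []
        for s in range(n):
            # Best predecessor of state s at frame t.
            prev = max(range(n), key=lambda r: delta[r] + log_trans[r][s])
            new_delta.append(delta[prev] + log_trans[prev][s] + log_emis[t][s])
            bp.append(prev)
        delta = new_delta
        backptrs.append(bp)
    # Trace back from the best final state.
    last = max(range(n), key=lambda s: delta[s])
    path = [last]
    for bp in reversed(backptrs):
        path.append(bp[path[-1]])
    path.reverse()
    return path, delta[last]


if __name__ == "__main__":
    # Hypothetical 3-state left-to-right model; all numbers are made up.
    trans = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]
    init = [1.0, 0.0, 0.0]
    priors = [1 / 3, 1 / 3, 1 / 3]
    posteriors = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1],
                  [0.1, 0.7, 0.2], [0.1, 0.2, 0.7]]
    emis = scaled_log_likelihoods(posteriors, priors)
    log_trans = [[safe_log(p) for p in row] for row in trans]
    path, score = viterbi(emis, log_trans, [safe_log(p) for p in init])
    print(path)  # [0, 1, 1, 2]
```

Working in the log domain avoids numerical underflow on long utterances. With uniform priors, as in the toy example, the division only rescales every path by the same constant. With real, skewed priors it compensates for states that are over-represented in the network's training data.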

As for Italian Text-To-Speech (TTS), the expertise IFD has built up in this field has been used to develop the first Italian version of "Festival" [3], a software package developed by the "Centre for Speech Technology Research" (CSTR) of the University of Edinburgh that is particularly well suited to implementing multilingual TTS systems.

Finally, in the area of facial animation [4], we have started several activities aimed at defining a new "talking agent" able to give the user appropriate audio-visual feedback, in order to make the system more natural and user-friendly.

To test the system, we will design a language-learning application, adopting the most modern technologies for the development of dialogue systems.

Bibliographic References

[1] Fanty, M., Pochmara, J., and Cole, R.A., "An Interactive Environment for Speech Recognition Research," in Proceedings of ICSLP-92, pp. 1543-1546, Banff, Alberta, October 1992.

[2] Sutton, S., Cole, R.A., de Villiers, J., Schalkwyk, J., Vermeulen, P., Macon, M., Yan, Y., Kaiser, E., Rundle, B., Shobaki, K., Hosom, J.P., Kain, A., Wouters, J., Massaro, D., and Cohen, M., "Universal Speech Tools: The CSLU Toolkit," in Proceedings of ICSLP-98, vol. 7, pp. 3221-3224, Sydney, Australia, November 1998.

[3] Taylor, P.A., Black, A., and Caley, R., "The Architecture of the Festival Speech Synthesis System," in The Third ESCA Workshop on Speech Synthesis, pp. 147-151, 1998.

[4] Massaro, D.W., Perceiving Talking Faces: From Speech Perception to a Behavioral Principle, MIT Press, Cambridge, MA, 1998.
